
Question:

How can we reduce the time cost of canary deployments?

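Scenario: a fairly large project takes about 1 hour to deploy with a normal rolling deployment, and deployments of important services are usually scheduled for the early morning hours. With a canary deployment, the time spent verifying the canary version is added serially to the total. If verification takes 1 hour, the operations staff go home at least 1 hour later.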

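So the current practice is: once a service has passed testing, it is rolled out to production with a rolling deployment. After all services are deployed, the support staff run a UAT. If there are no problems, the release is finished; if there are, it is rolled back and waits for the next release window.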

=> Improvements

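1. Increase test automation so that the canary version can be verified automatically, which removes the serial testing time.

2. Make canary verification a one-click automated step (for example, add a header so that the automated tests reach the canary version whose header value matches). If verification succeeds, patch the new version over the old one directly; if it fails, delete the canary version. This amounts to a rollback and does not affect production users.

3. Release with header value + weight so that some early-access users can try the new features, e.g. through a beta app?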
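Improvements 1 and 2 reduce to an automated gate. First, run tests against the canary (reached through a header, as in the ingress-nginx example in Part 2). Then either promote the canary or delete it. The Java sketch below covers only the decision step. It assumes the test results arrive as HTTP status codes and uses a hypothetical 1% error-rate threshold. How the tests run and how promotion is triggered are left to the pipeline.

```java
import java.util.List;

public final class CanaryGate {

    /** Outcome of an automated canary check. */
    public enum Decision { PROMOTE, ROLLBACK }

    // Promotes only if at least one check ran and the share of failed checks
    // (status codes outside 200..299) does not exceed maxErrorRate.
    public static Decision decide(List<Integer> statusCodes, double maxErrorRate) {
        if (statusCodes.isEmpty()) {
            return Decision.ROLLBACK; // no evidence, so do not promote
        }
        long failures = statusCodes.stream()
                .filter(code -> code < 200 || code >= 300)
                .count();
        double errorRate = (double) failures / statusCodes.size();
        return errorRate <= maxErrorRate ? Decision.PROMOTE : Decision.ROLLBACK;
    }

    public static void main(String[] args) {
        // Hypothetical results of automated tests sent to the canary via its header.
        List<Integer> results = List.of(200, 200, 200, 503);
        // PROMOTE: roll the new version out fully; ROLLBACK: delete the canary.
        System.out.println(decide(results, 0.01));
    }
}
```

Treating an empty result list as ROLLBACK is a deliberate choice: a gate that promotes when no tests ran would defeat its purpose.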

Part 1: Three deployment strategies in K8S

1. Comparing the deployment strategies

The following compares rolling, blue-green, and canary deployments.

Rolling deployment

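The new version of the application gradually replaces the old version, and the deployment itself takes place over a period of time. During that period the old and new versions coexist without affecting functionality or user experience. This process makes it easier to roll back any new component that is incompatible with the old ones.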

Blue-green deployment

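The new version of the application is deployed in the green environment, where functional and performance tests are run. Once the tests pass, traffic is routed from the blue version to the green version, and the green version becomes the new production environment. In this approach, two identical production environments run in parallel.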

Canary deployment

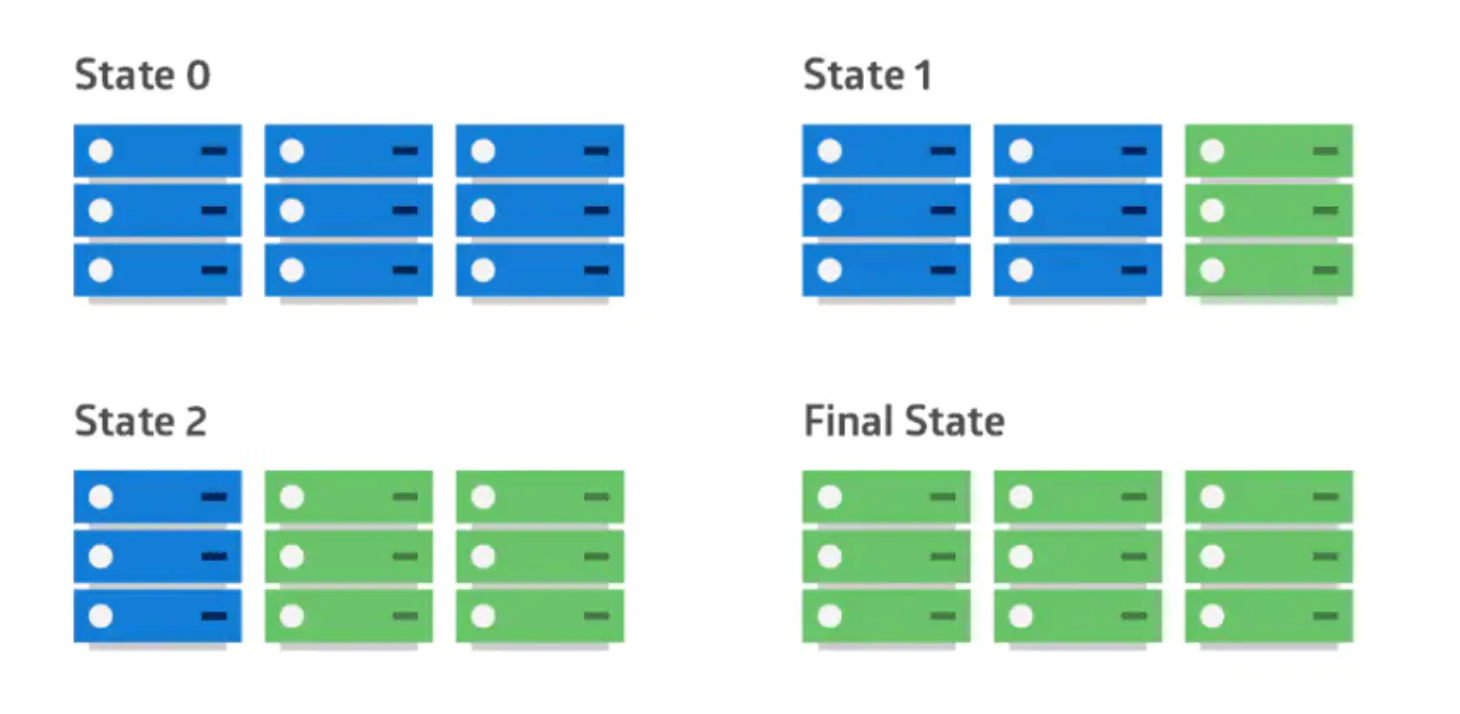

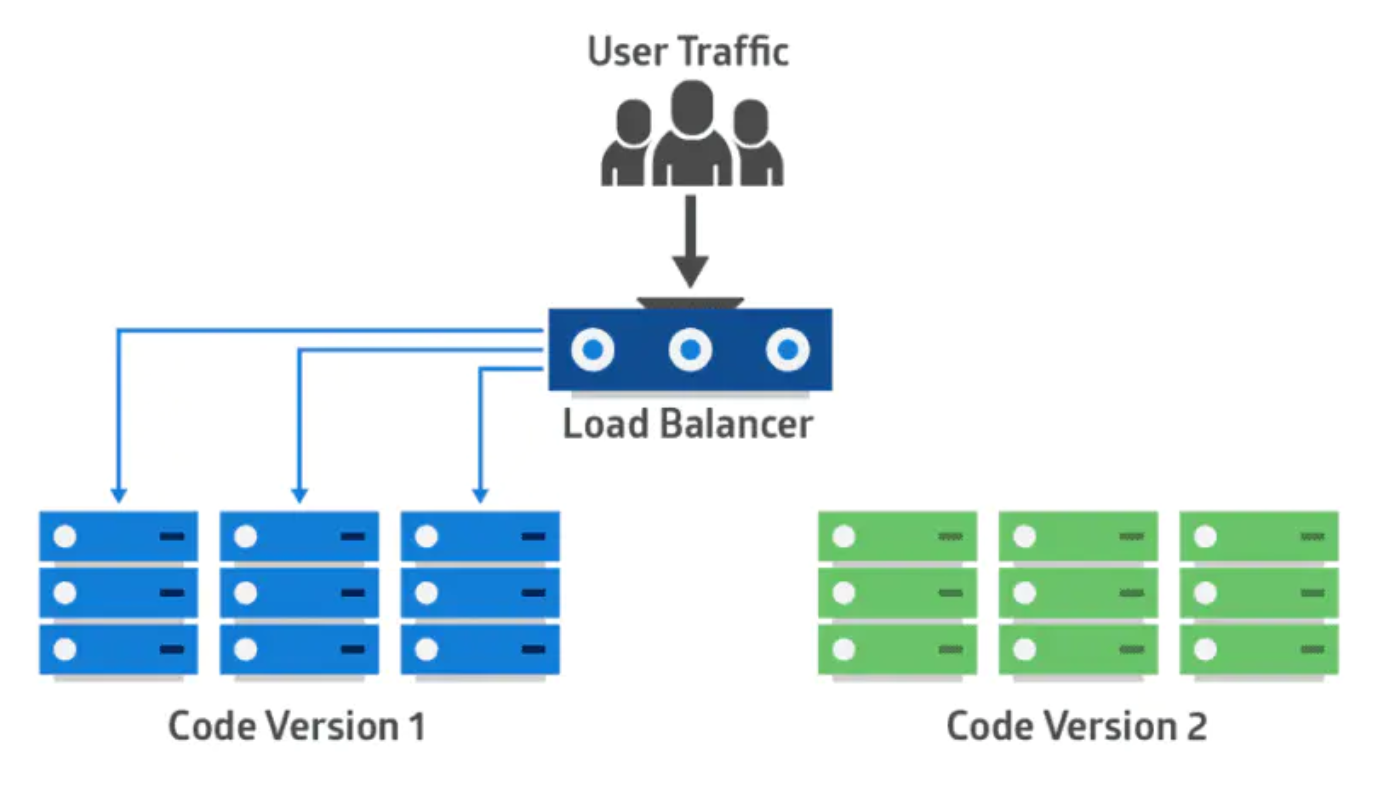

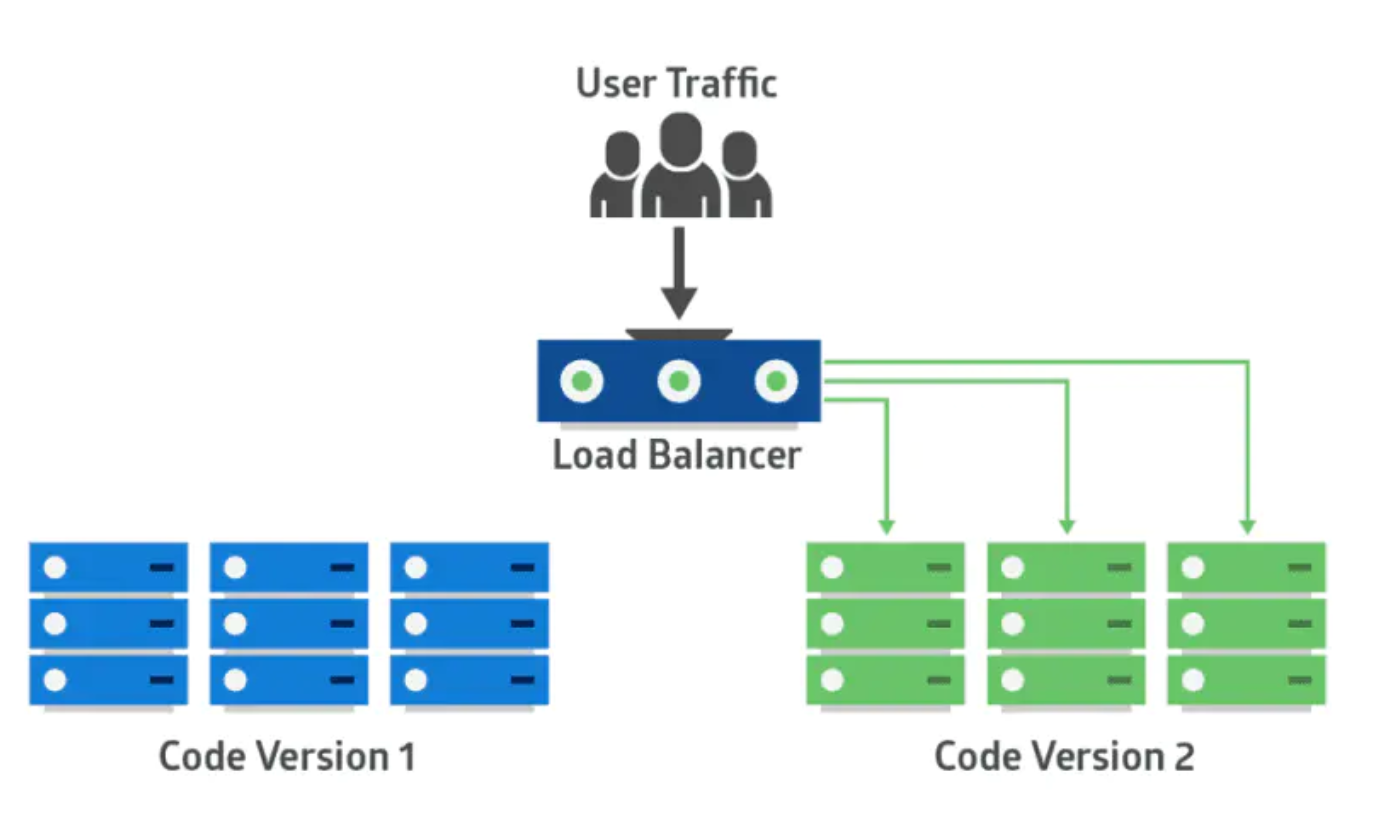

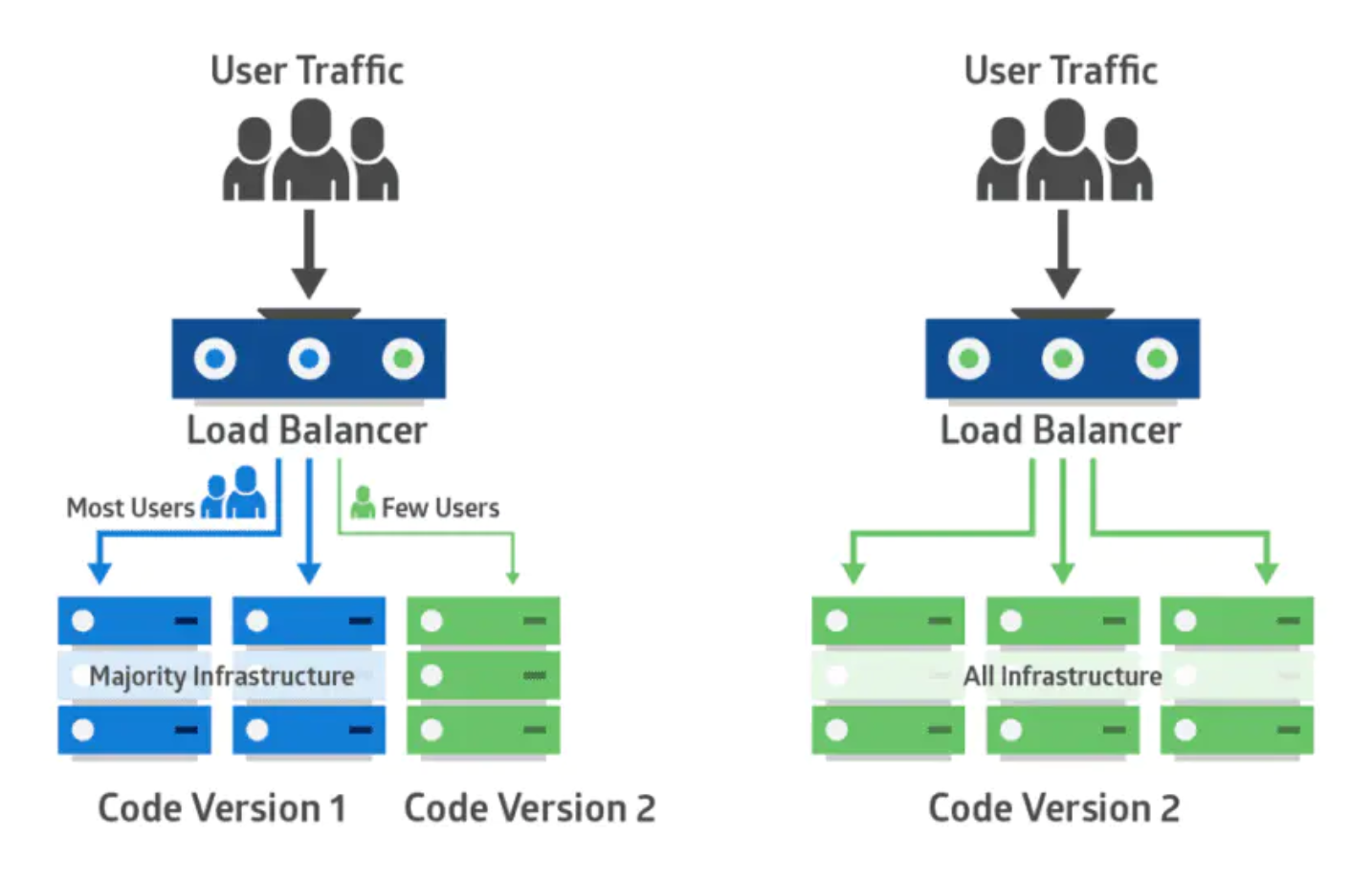

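With a canary deployment, you deploy the new application code to a small part of the production infrastructure. Once the application is signed off for release, only a few users are routed to it, which minimizes the impact. If no errors occur, the new version can be rolled out gradually to the whole infrastructure. The name comes from coal miners, who used to send a canary into the mine before going down to check for toxic gas: if the canary survived, the mine was safe. The figures illustrate a canary deployment: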

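A canary deployment gradually shifts production traffic from version A to version B. Traffic is usually split by weight. For example, 90% of requests go to version A and 10% go to version B.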
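Splitting by weight means each request is independently sent to B with a probability equal to B's weight. The minimal Java simulation below assumes the 90/10 split from the example. It uses an arbitrary fixed seed so that runs are repeatable. It illustrates the arithmetic only and is not the implementation of any particular proxy or load balancer.

```java
import java.util.Random;

public final class WeightedSplit {

    // Returns true if a single request goes to version B, given B's weight in percent.
    // nextInt(100) is uniform over 0..99, so values below weightB occur weightB% of the time.
    public static boolean routeToB(Random rnd, int weightB) {
        return rnd.nextInt(100) < weightB;
    }

    // Sends n simulated requests and returns the fraction routed to version B.
    public static double fractionToB(int n, int weightB, long seed) {
        Random rnd = new Random(seed);
        int toB = 0;
        for (int i = 0; i < n; i++) {
            if (routeToB(rnd, weightB)) {
                toB++;
            }
        }
        return (double) toB / n;
    }

    public static void main(String[] args) {
        // With 100,000 requests the observed share should land close to 0.10.
        System.out.printf("share to B: %.3f%n", fractionToB(100_000, 10, 42L));
    }
}
```

The split only holds on average. Over a handful of requests, the observed share can differ noticeably from 10%.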

2. Implementing a canary deployment with Kubernetes

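Main steps:

- Deploy v1 of the application. At this point the Service routes all traffic to v1
- Deploy v2 with x/10 replicas (where x is the total replica count) and scale v1 down by x/10. About 1/10 of the traffic now goes to v2
- Gradually scale v1 down and v2 up until v2 has replaced all of v1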
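With a plain Service, the canary's traffic share is roughly its fraction of the ready pods behind the Service. This assumes the Service spreads new connections about evenly across matching pods, which is approximately what kube-proxy does. The minimal Java sketch below prints such a schedule. It assumes 10 replicas in total and moves a fixed batch of replicas per step, as in the example below. The shares are approximations, not exact request counts.

```java
import java.util.ArrayList;
import java.util.List;

public final class CanarySchedule {

    /** One rollout step: replica counts for v1 and v2. */
    public record Step(int v1Replicas, int v2Replicas) {
        // Approximate share of traffic reaching v2 if pods receive equal shares.
        public double v2Share() {
            return (double) v2Replicas / (v1Replicas + v2Replicas);
        }
    }

    // Moves `batch` replicas from v1 to v2 per step until v2 owns all `total` replicas.
    public static List<Step> plan(int total, int batch) {
        if (total <= 0 || batch <= 0) {
            throw new IllegalArgumentException("total and batch must be positive");
        }
        List<Step> steps = new ArrayList<>();
        for (int v2 = batch; ; v2 += batch) {
            int clamped = Math.min(v2, total);
            steps.add(new Step(total - clamped, clamped));
            if (clamped == total) {
                break;
            }
        }
        return steps;
    }

    public static void main(String[] args) {
        for (Step s : plan(10, 1)) {
            // Each line corresponds to a pair of `kubectl scale` commands.
            System.out.printf("v1=%d v2=%d  ~%.0f%% to v2%n",
                    s.v1Replicas(), s.v2Replicas(), s.v2Share() * 100);
        }
    }
}
```

A larger batch means fewer steps but larger traffic jumps. The first step (9 v1 pods, 1 v2 pod, about 10%) matches the manual example that follows.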

2.1 Set up a demo service

app-v1.yaml : https://github.com/ContainerSolutions/k8s-deployment-strategies/blob/master/canary/native/app-v1.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-app
  labels:
    app: my-app
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: http
  selector:
    app: my-app
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-v1
  labels:
    app: my-app
spec:
  replicas: 10
  selector:
    matchLabels:
      app: my-app
      version: v1.0.0
  template:
    metadata:
      labels:
        app: my-app
        version: v1.0.0
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9101"
    spec:
      containers:
      - name: my-app
        image: containersol/k8s-deployment-strategies
        ports:
        - name: http
          containerPort: 8080
        - name: probe
          containerPort: 8086
        env:
        - name: VERSION
          value: v1.0.0
        livenessProbe:
          httpGet:
            path: /live
            port: probe
          initialDelaySeconds: 5
          periodSeconds: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: probe
          periodSeconds: 5

$ kubectl apply -f app-v1.yaml
service/my-app created
deployment.apps/my-app-v1 created

$ kubectl get service my-app
NAME     TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
my-app   NodePort   10.98.198.198   <none>        80:30969/TCP   23m

$ curl 10.98.198.198:80
Host: my-app-v1-c9b7f9985-5qvz4, Version: v1.0.0

2.2 Upgrade the application with a canary deployment

Next, we upgrade my-app-v1 to my-app-v2:

app-v2.yaml : https://github.com/ContainerSolutions/k8s-deployment-strategies/blob/master/canary/native/app-v2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-v2
  labels:
    app: my-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
      version: v2.0.0
  template:
    metadata:
      labels:
        app: my-app
        version: v2.0.0
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9101"
    spec:
      containers:
      - name: my-app
        image: containersol/k8s-deployment-strategies
        ports:
        - name: http
          containerPort: 8080
        - name: probe
          containerPort: 8086
        env:
        - name: VERSION
          value: v2.0.0
        livenessProbe:
          httpGet:
            path: /live
            port: probe
          initialDelaySeconds: 5
          periodSeconds: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: probe
          periodSeconds: 5

Start watches to monitor changes to pods and deployments:

$ kubectl get --watch deployment
$ kubectl get --watch pod

Upgrade:

$ kubectl apply -f app-v2.yaml
deployment.apps/my-app-v2 created

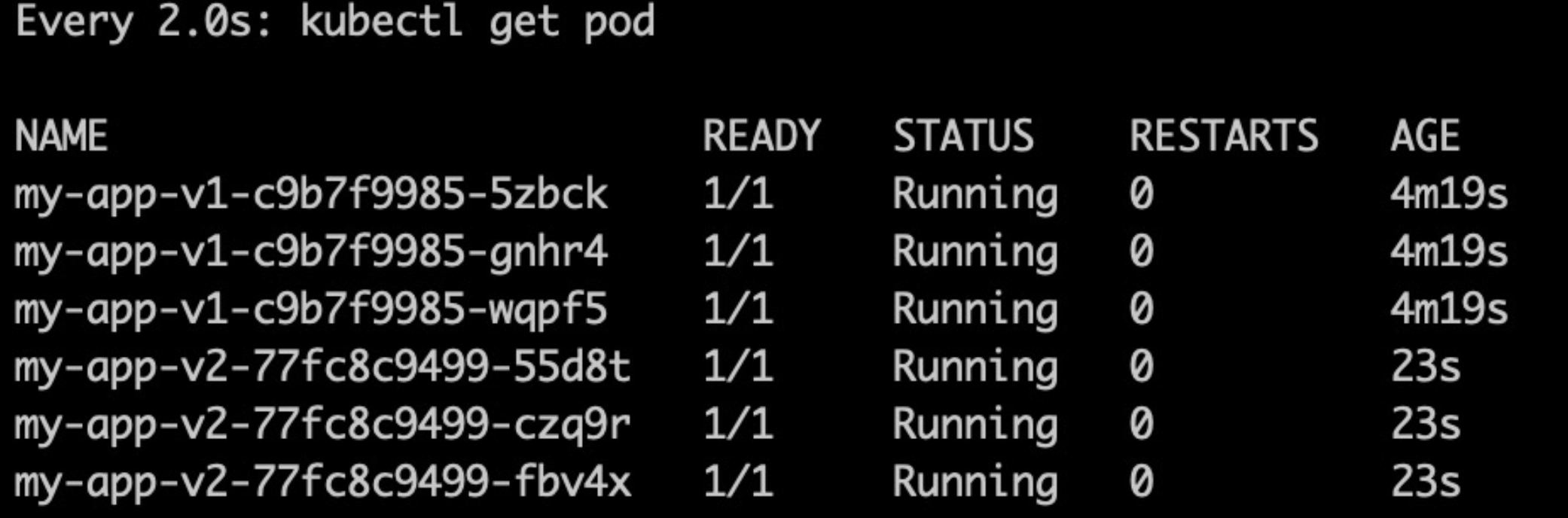

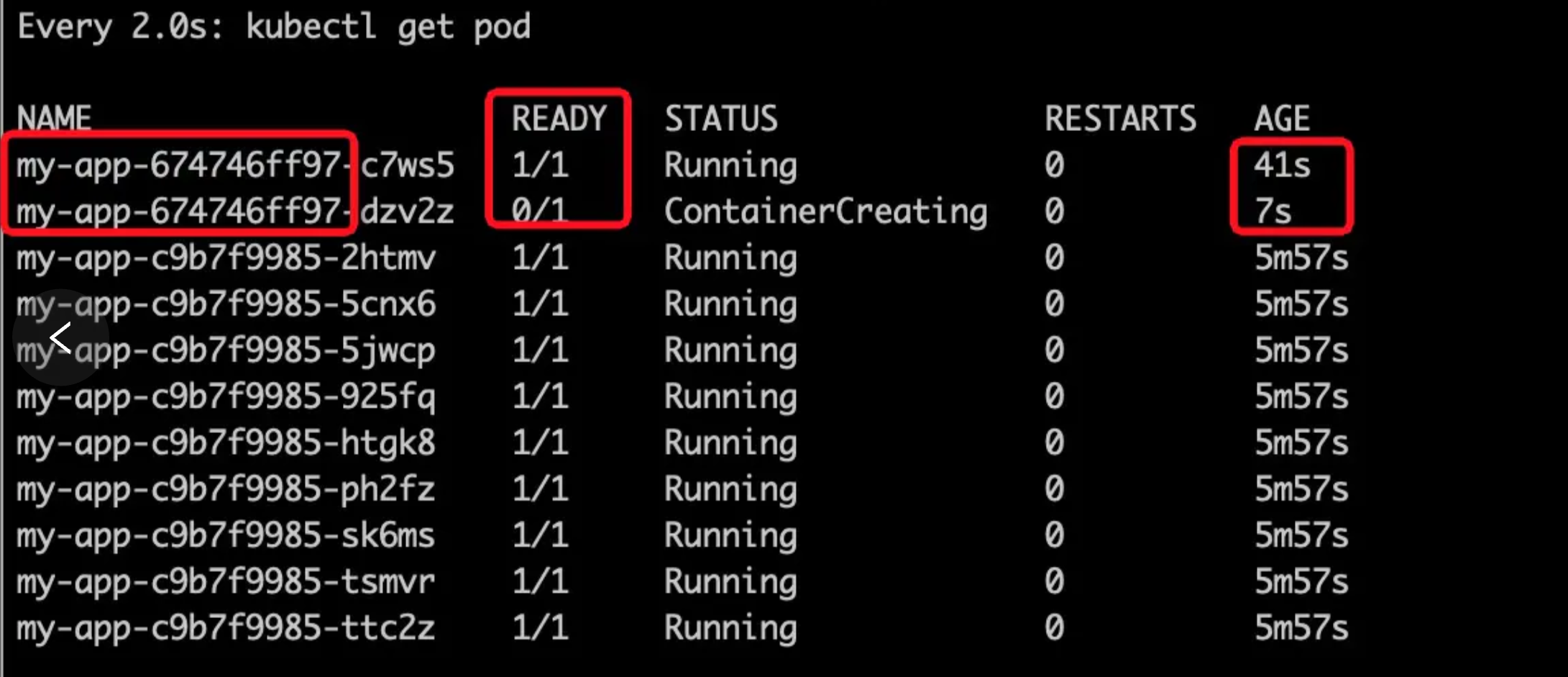

You can now see that one my-app-v2 pod has started:

$ kubectl get --watch deployment
NAME        READY   UP-TO-DATE   AVAILABLE   AGE
my-app-v1   10/10   10           10          45m
my-app-v2   1/1     1            1           46s

$ kubectl scale --replicas=9 deploy my-app-v1
deployment.apps/my-app-v1 scaled

$ kubectl get deployments
NAME        READY   UP-TO-DATE   AVAILABLE   AGE
my-app-v1   9/9     9            9           47m
my-app-v2   1/1     1            1           2m48s

We have now scaled my-app-v1 down to 9 replicas. The Service load-balances across 10 pods in total, so my-app-v2 receives about 10% (1/10) of the traffic:

$ service=10.98.198.198:80
$ while sleep 0.1; do curl "$service"; done
Host: my-app-v1-c9b7f9985-mqnmr, Version: v1.0.0
Host: my-app-v1-c9b7f9985-bl4g7, Version: v1.0.0
Host: my-app-v1-c9b7f9985-rmng9, Version: v1.0.0
Host: my-app-v1-c9b7f9985-mz9hc, Version: v1.0.0
Host: my-app-v1-c9b7f9985-bl4g7, Version: v1.0.0
Host: my-app-v1-c9b7f9985-mz9hc, Version: v1.0.0
Host: my-app-v1-c9b7f9985-mm6fp, Version: v1.0.0
Host: my-app-v2-77fc8c9499-m6n9j, Version: v2.0.0
Host: my-app-v1-c9b7f9985-l69pf, Version: v1.0.0
Host: my-app-v1-c9b7f9985-mqnmr, Version: v1.0.0
Host: my-app-v1-c9b7f9985-mz9hc, Version: v1.0.0
Host: my-app-v1-c9b7f9985-62zb4, Version: v1.0.0

验证通过后,我们逐步将my-app-v2扩容到10个,将my-app-v1缩小到0个

$kubectl scale --replicas=10 deploy my-app-v2

$kubectl delete deploy my-app-v1

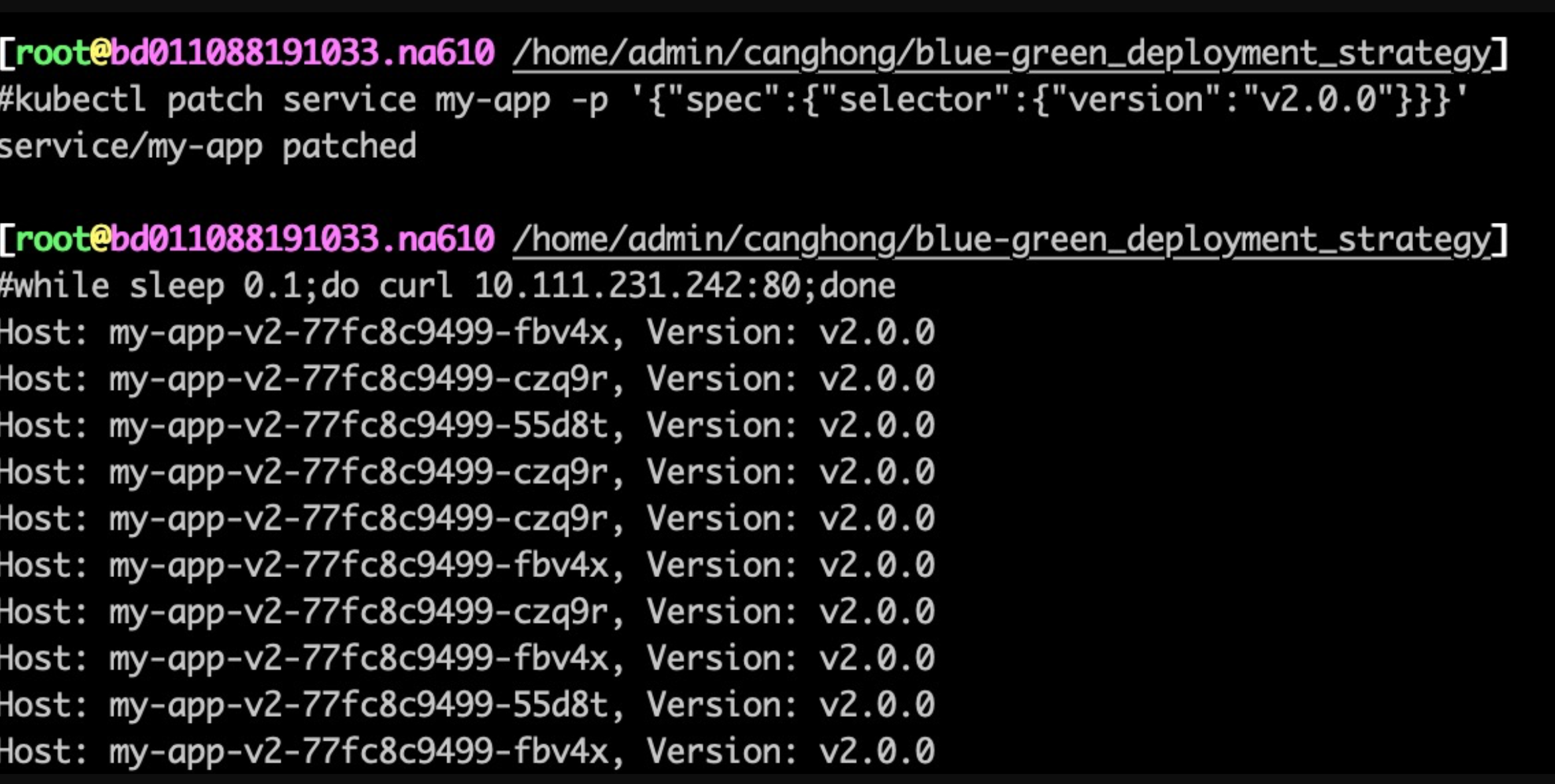

再次验证服务,会发现my-app-v2承接了所有流量:

$while sleep 0.1; do curl "$service"; done

测试完成清理数据

$kubectl delete all -l app=my-app

3. Implementing a blue-green deployment with Kubernetes

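Main steps:

- Deploy v1. At this point the Service routes all traffic to v1
- Deploy v2 and wait until it is fully rolled out
- Switch the Service's traffic from v1 to v2
- Delete v1

First, watch the pods in real time:

$ watch kubectl get pod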

3.1 Set up a demo service

apiVersion: v1
kind: Service
metadata:
  name: my-app
  labels:
    app: my-app
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: http

  # Note here that we match both the app and the version
  selector:
    app: my-app
    version: v1.0.0
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-v1
  labels:
    app: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
      version: v1.0.0
  template:
    metadata:
      labels:
        app: my-app
        version: v1.0.0
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9101"
    spec:
      containers:
      - name: my-app
        image: containersol/k8s-deployment-strategies
        ports:
        - name: http
          containerPort: 8080
        - name: probe
          containerPort: 8086
        env:
        - name: VERSION
          value: v1.0.0
        livenessProbe:
          httpGet:
            path: /live
            port: probe
          initialDelaySeconds: 5
          periodSeconds: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: probe
          periodSeconds: 5

Deploy the Service and v1:

$ kubectl apply -f app-v1.yaml
service/my-app created
deployment.apps/my-app-v1 created

$ kubectl get service
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP        14d
my-app       NodePort    10.111.231.242   <none>        80:31540/TCP   18s

$ while sleep 0.1; do curl 10.111.231.242:80; done
Host: my-app-v1-c9b7f9985-wqpf5, Version: v1.0.0
Host: my-app-v1-c9b7f9985-wqpf5, Version: v1.0.0
Host: my-app-v1-c9b7f9985-gnhr4, Version: v1.0.0

3.2 Deploy v2

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-v2
  labels:
    app: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
      version: v2.0.0
  template:
    metadata:
      labels:
        app: my-app
        version: v2.0.0
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9101"
    spec:
      containers:
      - name: my-app
        image: containersol/k8s-deployment-strategies
        ports:
        - name: http
          containerPort: 8080
        - name: probe
          containerPort: 8086
        env:
        - name: VERSION
          value: v2.0.0
        livenessProbe:
          httpGet:
            path: /live
            port: probe
          initialDelaySeconds: 5
          periodSeconds: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: probe
          periodSeconds: 5

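Once the deployment finishes, there are two Deployments: 3 pods run v1 and the other 3 run v2. The Service still sends all traffic to v1. Switch the traffic by patching the Service selector to v2:

$ kubectl patch service my-app -p '{"spec":{"selector":{"version":"v2.0.0"}}}'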

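All of the service's traffic now goes to v2. If there is a problem, roll back by pointing the selector back at v1:

$ kubectl patch service my-app -p '{"spec":{"selector":{"version":"v1.0.0"}}}'

Once v2 is confirmed healthy, delete v1. After this, the rollback above has no v1 pods left to route to:

$ kubectl delete deploy my-app-v1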
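The switch works because a Service sends traffic to every pod whose labels contain all of the selector's key/value pairs. The patch is merged into the existing selector, so `app` stays and only `version` changes. Including `version` in the selector is what lets one patch move all the traffic. The minimal Java sketch below models that matching rule for equality-based selectors. The pod names are hypothetical, and this is a model of the semantics, not Kubernetes client code.

```java
import java.util.List;
import java.util.Map;

public final class SelectorDemo {

    /** A pod reduced to its name and labels. */
    public record Pod(String name, Map<String, String> labels) {}

    // Equality-based selector: every selector key must be present with the same value.
    public static boolean matches(Map<String, String> selector, Map<String, String> labels) {
        return selector.entrySet().stream()
                .allMatch(e -> e.getValue().equals(labels.get(e.getKey())));
    }

    // Returns the names of the pods the Service would send traffic to.
    public static List<String> endpoints(Map<String, String> selector, List<Pod> pods) {
        return pods.stream()
                .filter(p -> matches(selector, p.labels()))
                .map(Pod::name)
                .toList();
    }

    public static void main(String[] args) {
        List<Pod> pods = List.of(
                new Pod("v1-a", Map.of("app", "my-app", "version", "v1.0.0")),
                new Pod("v2-a", Map.of("app", "my-app", "version", "v2.0.0")));

        // Selector before the patch: only v1 pods receive traffic.
        System.out.println(endpoints(Map.of("app", "my-app", "version", "v1.0.0"), pods));
        // Selector after patching version to v2.0.0: only v2 pods receive traffic.
        System.out.println(endpoints(Map.of("app", "my-app", "version", "v2.0.0"), pods));
        // Without a version key, the Service would mix both versions.
        System.out.println(endpoints(Map.of("app", "my-app"), pods));
    }
}
```

The last case is what the canary example in section 2 relies on, since its Service selects on `app` only.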

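4. Implementing a rolling deployment with Kubernetes

Main steps:

- Deploy v1. At this point the Service routes all traffic to v1
- Prepare the v2 manifest with the RollingUpdate update strategy
- Deploy v2

4.1 Set up a demo service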

app-v1.yaml: https://github.com/ContainerSolutions/k8s-deployment-strategies/blob/master/ramped/app-v1.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-app
  labels:
    app: my-app
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: http
  selector:
    app: my-app
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
  labels:
    app: my-app
spec:
  replicas: 10
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
        version: v1.0.0
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9101"
    spec:
      containers:
      - name: my-app
        image: containersol/k8s-deployment-strategies
        ports:
        - name: http
          containerPort: 8080
        - name: probe
          containerPort: 8086
        env:
        - name: VERSION
          value: v1.0.0
        livenessProbe:
          httpGet:
            path: /live
            port: probe
          initialDelaySeconds: 5
          periodSeconds: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: probe
          periodSeconds: 5

Deploy app-v1.yaml:

$ kubectl apply -f app-v1.yaml
service/my-app created
deployment.apps/my-app created

$ kubectl get service
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        14d
my-app       NodePort    10.100.43.22   <none>        80:32725/TCP   45s

$ curl 10.100.43.22:80
Host: my-app-c9b7f9985-ph2fz, Version: v1.0.0

4.2 Prepare the rolling upgrade

Watch the pods change:

$ watch kubectl get pod

app-v2.yaml : https://github.com/ContainerSolutions/k8s-deployment-strategies/blob/master/ramped/app-v2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
  labels:
    app: my-app
spec:
  replicas: 10

  # Here we define the rolling update strategy
  # - maxSurge defines how many pods can be added at a time
  # - maxUnavailable defines how many pods can be unavailable
  #   during the rolling update
  #
  # Setting maxUnavailable to 0 makes sure we keep full
  # capacity during the rolling update.
  # You can also use percentage values instead of integers.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0

  # The selector tells the Deployment which pods it manages. In apps/v1
  # it is required and cannot be changed after creation, so it must match
  # the v1 Deployment. Because each version's pods carry their own
  # "version" label, matchLabels leaves that label out and selects on
  # "app" only.
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
        version: v2.0.0
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9101"
    spec:
      containers:
      - name: my-app
        image: containersol/k8s-deployment-strategies
        ports:
        - name: http
          containerPort: 8080
        - name: probe
          containerPort: 8086
        env:
        - name: VERSION
          value: v2.0.0
        livenessProbe:
          httpGet:
            path: /live
            port: probe
          initialDelaySeconds: 5
          periodSeconds: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: probe
          # Initial delay is set to a high value to make the
          # ramped deployment easier to observe
          initialDelaySeconds: 15
          periodSeconds: 5

Start the upgrade:

$ kubectl apply -f app-v2.yaml
deployment.apps/my-app configured

The pods are now replaced one at a time.
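With maxSurge: 1 and maxUnavailable: 0, the Deployment may run at most replicas + 1 pods and must keep at least replicas pods available. It therefore adds one v2 pod, waits for it, then removes one v1 pod, and repeats. The simplified Java model below reproduces that bound. It assumes every new pod becomes ready immediately and proceeds in lockstep. The real controller reacts to ReplicaSet and readiness events rather than running this loop.

```java
public final class RollingUpdateModel {

    /** Rollout summary: number of steps, peak pod count, lowest available pod count. */
    public record Result(int steps, int maxPods, int minAvailable) {}

    // Lockstep model of a RollingUpdate. Each step first adds new-version pods
    // without exceeding replicas + maxSurge in total (new pods are assumed to
    // become ready immediately), then deletes old-version pods while keeping at
    // least replicas - maxUnavailable pods.
    public static Result simulate(int replicas, int maxSurge, int maxUnavailable) {
        if (maxSurge == 0 && maxUnavailable == 0) {
            // Kubernetes rejects this combination too: the rollout could never progress.
            throw new IllegalArgumentException("maxSurge and maxUnavailable cannot both be 0");
        }
        int oldPods = replicas;
        int newPods = 0;
        int steps = 0;
        int maxPods = replicas;
        int minAvailable = replicas;
        while (oldPods > 0 || newPods < replicas) {
            // Scale up the new version within the surge budget.
            int room = replicas + maxSurge - (oldPods + newPods);
            newPods += Math.max(0, Math.min(room, replicas - newPods));
            maxPods = Math.max(maxPods, oldPods + newPods);

            // Scale down the old version while respecting the availability floor.
            int removable = (oldPods + newPods) - (replicas - maxUnavailable);
            oldPods -= Math.min(Math.max(0, removable), oldPods);
            minAvailable = Math.min(minAvailable, oldPods + newPods);
            steps++;
        }
        return new Result(steps, maxPods, minAvailable);
    }

    public static void main(String[] args) {
        // The settings from app-v2.yaml above: 10 replicas, maxSurge 1, maxUnavailable 0.
        Result r = simulate(10, 1, 0);
        System.out.printf("steps=%d maxPods=%d minAvailable=%d%n",
                r.steps(), r.maxPods(), r.minAvailable());
    }
}
```

For the settings above, the model takes 10 steps, never exceeds 11 pods, and never drops below 10 available. Swapping to maxSurge: 0 and maxUnavailable: 1 trades the extra pod for a temporary loss of one pod of capacity.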


To verify traffic during the rollout, you can pause it at any point and resume it afterwards:

$ kubectl rollout pause deploy my-app
deployment.apps/my-app paused

$ kubectl rollout resume deploy my-app
deployment.apps/my-app resumed

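Part 2: Gray (canary) releases in K8S with ingress-nginx

We covered gray releases with Ambassador earlier. This part shows how to do the same with ingress-nginx.

Introduction

Ingress-Nginx is a Kubernetes ingress controller. It supports Ingress annotations for gray releases and testing in different scenarios.

Nginx annotations support the following 4 canary rules: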

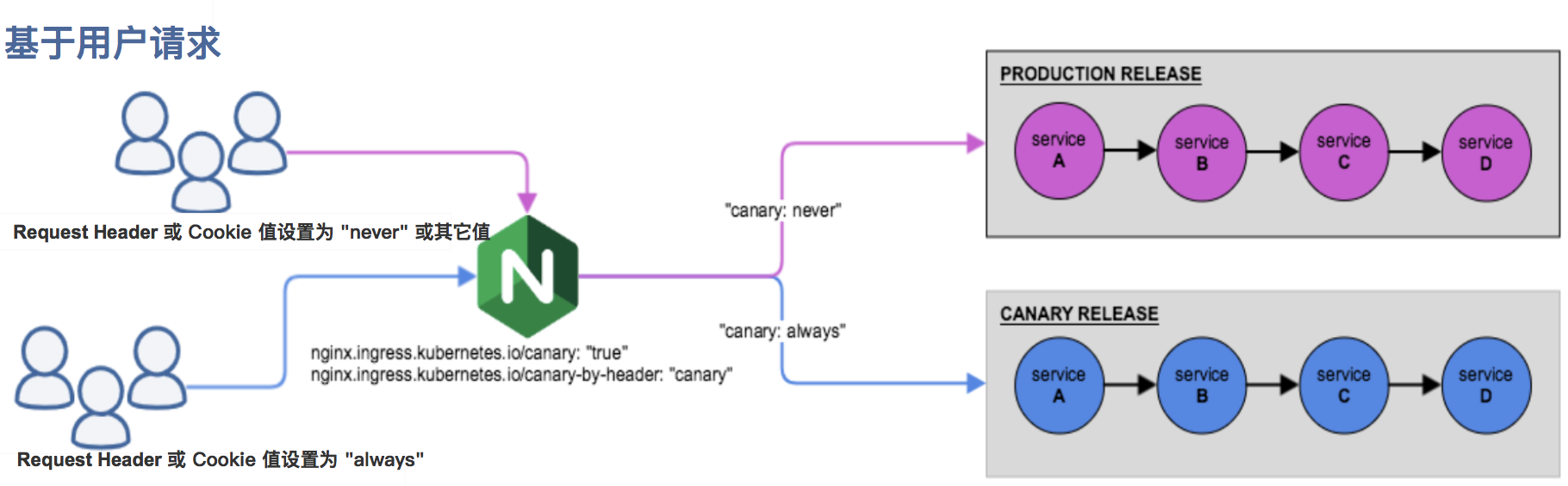

nginx.ingress.kubernetes.io/canary-by-header:

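Traffic splitting based on a request header, suitable for gray releases and A/B testing. When the request header is set to always, the request is always sent to the canary version. When it is set to never, the request is never sent to the canary. For any other header value, the header is ignored and the request is compared against the other canary rules in order of precedence.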

nginx.ingress.kubernetes.io/canary-by-header-value:

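The request header value to match, telling the Ingress to route the request to the service specified in the canary Ingress. When the request header is set to this value, the request is routed to the canary. This rule lets users define a custom header value and must be used together with the previous annotation (canary-by-header).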

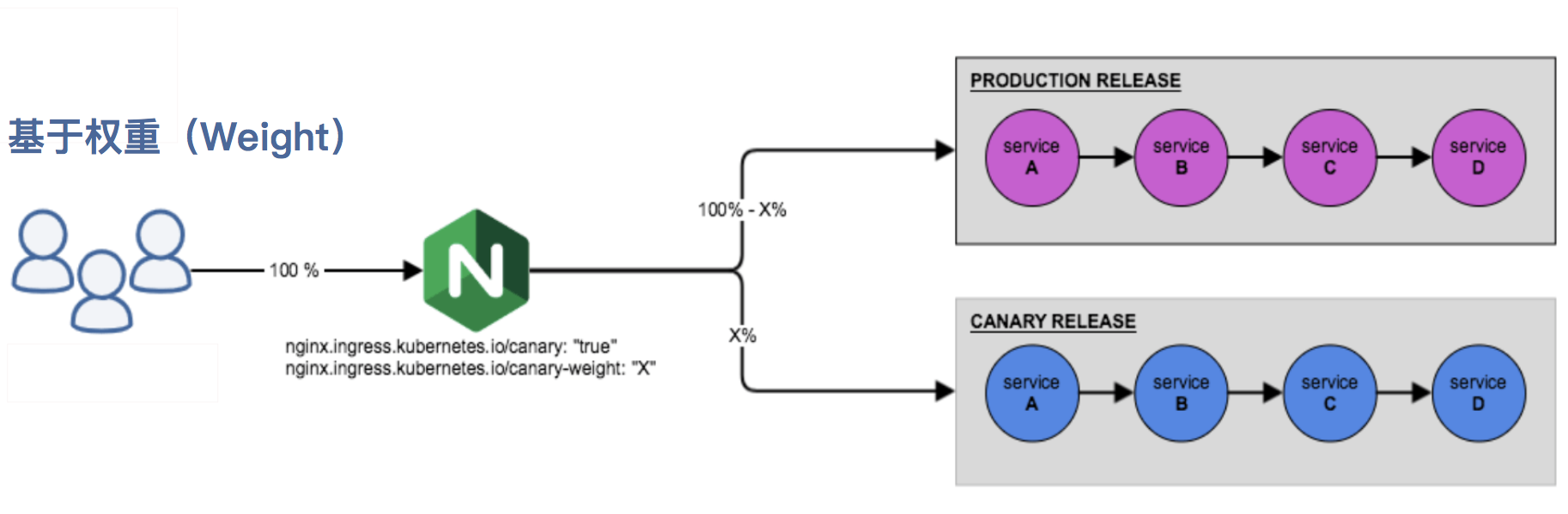

nginx.ingress.kubernetes.io/canary-weight:

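Traffic splitting based on service weight, suitable for blue-green deployments. The weight ranges from 0 to 100 and routes that percentage of requests to the service specified in the canary Ingress. A weight of 0 means this canary rule sends no requests to the canary service. A weight of 100 means all requests are sent to the canary.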

nginx.ingress.kubernetes.io/canary-by-cookie:

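Traffic splitting based on a cookie, suitable for gray releases and A/B testing. This annotation names the cookie that tells the Ingress to route the request to the service specified in the canary Ingress. When the cookie value is always, the request is routed to the canary. When it is never, the request is not sent to the canary. For any other value, the cookie is ignored and the request is compared against the other canary rules in order of precedence.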

Note: canary rules are evaluated in the following order of precedence:

canary-by-header -> canary-by-cookie -> canary-weight
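The precedence can be written as a small decision function. The Java sketch below is a simplified model of the behavior described above. It assumes a single canary Ingress with weights out of 100. The parameter names are hypothetical. The weight check takes a caller-supplied roll in 1..100 so that it can be tested deterministically; the controller draws this value randomly per request.

```java
public final class CanaryRules {

    /**
     * Decides whether a request goes to the canary backend (simplified model).
     *
     * @param header      value of the canary-by-header request header, or null if absent
     * @param headerValue configured canary-by-header-value, or null if not set
     * @param cookie      value of the canary-by-cookie cookie, or null if absent
     * @param weight      canary-weight, 0..100
     * @param roll        a random draw in 1..100 for this request
     */
    public static boolean toCanary(String header, String headerValue,
                                   String cookie, int weight, int roll) {
        // 1. canary-by-header (optionally with canary-by-header-value)
        if (header != null) {
            if (headerValue != null) {
                if (header.equals(headerValue)) {
                    return true; // exact match with the configured value
                }
                // any other value: ignore the header and fall through
            } else if (header.equals("always")) {
                return true;
            } else if (header.equals("never")) {
                return false;
            }
        }
        // 2. canary-by-cookie: only "always" and "never" are meaningful
        if (cookie != null) {
            if (cookie.equals("always")) {
                return true;
            }
            if (cookie.equals("never")) {
                return false;
            }
        }
        // 3. canary-weight: weight% of the remaining requests go to the canary
        return roll <= weight;
    }

    public static void main(String[] args) {
        System.out.println(toCanary("always", null, "never", 0, 100)); // header beats cookie
        System.out.println(toCanary(null, null, "always", 0, 100));    // cookie beats weight
        System.out.println(toCanary(null, null, null, 50, 30));        // roll within weight
    }
}
```

Note that "never" in a higher-priority rule stops evaluation. A request with `canary: never` is not sent to the canary, even when the weight would have selected it.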

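The four annotations above fall into two categories: weight-based rules (canary-weight) and request-based rules (canary-by-header, canary-by-header-value, and canary-by-cookie).

Note: Ingress-Nginx introduced the canary feature in version 0.21.0, so make sure your ingress-nginx version is 0.21.0 or later.

Testing

Preparing the applications

We use two versions of the service. The normal version: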

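import static java.util.Collections.singletonMap;

import io.micrometer.core.instrument.Counter;
import io.micrometer.core.instrument.MeterRegistry;
import java.util.Map;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.ResponseBody;

@SpringBootApplication
@Controller
public class RestPrometheusApplication {

    @Autowired
    private MeterRegistry registry;

    // Counts each hit in a Micrometer counter and returns a JSON greeting
    @GetMapping(path = "/", produces = "application/json")
    @ResponseBody
    public Map<String, Object> landingPage() {
        Counter.builder("mymetric").tag("foo", "bar").register(registry).increment();
        return singletonMap("hello", "ambassador");
    }

    public static void main(String[] args) {
        SpringApplication.run(RestPrometheusApplication.class, args);
    }
}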

Accessing it returns:

{"hello":"ambassador"}

The gray version:

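import static java.util.Collections.singletonMap;

import io.micrometer.core.instrument.Counter;
import io.micrometer.core.instrument.MeterRegistry;
import java.util.Map;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.ResponseBody;

@SpringBootApplication
@Controller
public class RestPrometheusApplication {

    @Autowired
    private MeterRegistry registry;

    // Same endpoint as the normal version; only the greeting text differs
    @GetMapping(path = "/", produces = "application/json")
    @ResponseBody
    public Map<String, Object> landingPage() {
        Counter.builder("mymetric").tag("foo", "bar").register(registry).increment();
        return singletonMap("hello", "ambassador, this is a gray version");
    }

    public static void main(String[] args) {
        SpringApplication.run(RestPrometheusApplication.class, args);
    }
}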

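Accessing it returns:

{"hello":"ambassador, this is a gray version"}

Ingress configuration

header

With both services deployed (springboot-rest-demo is the normal service and springboot-rest-demo-gray is the gray service), configure the Ingresses to use canary-by-header.

The Ingress for the normal service: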

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: springboot-rest-demo
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
  - host: springboot-rest.jadepeng.com
    http:
      paths:
      - backend:
          serviceName: springboot-rest-demo
          servicePort: 80

The canary Ingress:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: springboot-rest-demo-gray
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "canary"
    nginx.ingress.kubernetes.io/canary-by-header-value: "true"
spec:
  rules:
  - host: springboot-rest.jadepeng.com
    http:
      paths:
      - backend:
          serviceName: springboot-rest-demo-gray
          servicePort: 80

Save both manifests above in ingress-test.yml (separated by ---) and apply it:

kubectl -n=default apply -f ingress-test.yml
ingress.extensions/springboot-rest-demo created
ingress.extensions/springboot-rest-demo-gray created

Test without the header. Requests reach the production version by default:

$ curl http://springboot-rest.jadepeng.com; echo
{"hello":"ambassador"}
$ curl http://springboot-rest.jadepeng.com; echo
{"hello":"ambassador"}

With the header added, the request reaches the gray version:

$ curl -H "canary: true" http://springboot-rest.jadepeng.com; echo
{"hello":"ambassador, this is a gray version"}
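The two curl checks above are the kind of step that improvement 2 in the question proposes to automate. The minimal Java sketch below uses java.net.http. It assumes the host springboot-rest.jadepeng.com resolves from wherever the check runs, and it identifies the gray version by the text "gray version" in the response body. Only the classification and request-building logic can be exercised offline. The network call runs when a URL is passed as an argument.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public final class HeaderSmokeCheck {

    // Classifies a response body from the two demo apps above by its greeting text.
    public static String versionOf(String body) {
        return body.contains("gray version") ? "gray" : "stable";
    }

    // Builds a GET request, adding the "canary: true" header only when asked to.
    public static HttpRequest request(String url, boolean canary) {
        HttpRequest.Builder builder = HttpRequest.newBuilder(URI.create(url)).GET();
        if (canary) {
            builder.header("canary", "true");
        }
        return builder.build();
    }

    // Sends one plain and one header-tagged request and checks both were routed as configured.
    public static boolean check(HttpClient client, String url)
            throws IOException, InterruptedException {
        String plain = client.send(request(url, false), HttpResponse.BodyHandlers.ofString()).body();
        String tagged = client.send(request(url, true), HttpResponse.BodyHandlers.ofString()).body();
        return versionOf(plain).equals("stable") && versionOf(tagged).equals("gray");
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.out.println("usage: java HeaderSmokeCheck.java http://springboot-rest.jadepeng.com/");
            return;
        }
        boolean ok = check(HttpClient.newHttpClient(), args[0]);
        System.out.println(ok ? "canary routing OK" : "canary routing FAILED");
        if (!ok) {
            System.exit(1); // non-zero exit lets a pipeline stop and delete the canary
        }
    }
}
```

A real gate would also exercise the gray version's functional endpoints, not only the routing. The non-zero exit code is what a deployment pipeline would key on.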

weight

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "50"
  name: helloworld-weight
spec:
  rules:
  - host: hello.world.test
    http:
      paths:
      - backend:
          serviceName: hello-world-svc-v2
          servicePort: 80

Create the Ingress rules. helloworld-ingress.yaml (not shown) is the ordinary Ingress for hello.world.test, and weight-ingress.yaml is the canary Ingress above:

[root@vm10-0-11-201 ~]# kubectl apply -f helloworld-ingress.yaml
ingress.extensions/hello-world created
[root@vm10-0-11-201 ~]# kubectl apply -f weight-ingress.yaml
ingress.extensions/helloworld-weight created
[root@vm10-0-11-201 ~]# kubectl get ingress
NAME                CLASS    HOSTS              ADDRESS   PORTS   AGE
hello-world         <none>   hello.world.test             80      41s
helloworld-weight   <none>   hello.world.test             80      27s

Verify access: get the ingress controller's EXTERNAL-IP with the following command, then send requests to it:

[root@vm10-0-11-201 ~]# kubectl get svc -n ingress-nginx
NAME            TYPE           CLUSTER-IP     EXTERNAL-IP    PORT(S)                      AGE
nginx-ingress   LoadBalancer   10.254.28.54   120.92.xx.xx   80:31741/TCP,443:32754/TCP   3h19m
[root@vm10-0-11-201 ~]# for i in $(seq 1 10); do curl -H "Host: hello.world.test" http://120.92.xx.xx; done;
Hello World v2!
Hello World v2!
Hello World v1!
Hello World v2!
Hello World v2!
Hello World v1!
Hello World v1!
Hello World v1!
Hello World v2!
Hello World v2!

Over repeated requests, roughly 50% of the traffic goes to the v2 service (6 of the 10 requests above).
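Ten requests are a small sample, so a 6/4 split is consistent with a 50% weight. The minimal Java sketch below counts the v2 responses in the output above. It then checks whether the count lies within two standard deviations of the binomial expectation $n p$, where $\sigma = \sqrt{n p (1 - p)}$. Here $n = 10$ and $p = 0.5$, so the expectation is 5 and $\sigma \approx 1.58$. This band is a rough sanity check, not a formal statistical test.

```java
import java.util.List;

public final class WeightCheck {

    // Counts responses that came from the v2 service.
    public static long countV2(List<String> responses) {
        return responses.stream().filter(r -> r.contains("v2")).count();
    }

    // True if the observed count lies within expected +/- 2 standard deviations
    // of a binomial(n, p) distribution.
    public static boolean withinTwoSigma(long observed, int n, double p) {
        double expected = n * p;
        double sigma = Math.sqrt(n * p * (1 - p));
        return Math.abs(observed - expected) <= 2 * sigma;
    }

    public static void main(String[] args) {
        // The ten responses from the loop above, in order.
        List<String> responses = List.of(
                "Hello World v2!", "Hello World v2!", "Hello World v1!", "Hello World v2!",
                "Hello World v2!", "Hello World v1!", "Hello World v1!", "Hello World v1!",
                "Hello World v2!", "Hello World v2!");
        long v2 = countV2(responses);
        // prints: v2: 6 of 10, within 2 sigma of 50%: true
        System.out.printf("v2: %d of %d, within 2 sigma of 50%%: %b%n",
                v2, responses.size(), withinTwoSigma(v2, responses.size(), 0.5));
    }
}
```

The band shrinks relative to n as the sample grows. With 1,000 requests, the share would be expected to stay within about 47% to 53%.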

others

ingress-nginx has supported canary mode since 0.21.0; the corresponding merge is 3341. Canary deploys with ingress-nginx describes the usage.

First, create an ordinary Ingress A pointing to Service A:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/upstream-fail-timeout: "10"
    nginx.ingress.kubernetes.io/upstream-max-fails: "2"
  name: demo-echo-ingress
  namespace: demo-echo
spec:
  rules:
  - host: demo.echo.test
    http:
      paths:
      - path: /
        backend:
          serviceName: webshell
          servicePort: 80

Then create an Ingress B with the same host and path. Ingress B points to another service, Service B, and its annotations mark it as a canary Ingress:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "version"
    nginx.ingress.kubernetes.io/canary-by-header-value: "canary"
    nginx.ingress.kubernetes.io/canary-by-cookie: "canary-cookie"
    nginx.ingress.kubernetes.io/canary-weight: "50"
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/upstream-fail-timeout: "10"
    nginx.ingress.kubernetes.io/upstream-max-fails: "2"
  name: demo-echo-ingress-canary
  namespace: demo-echo
spec:
  rules:
  - host: demo.echo.test
    http:
      paths:
      - path: /
        backend:
          serviceName: echo
          servicePort: 80

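All requests carrying the "version: canary" header are sent to the canary version:

curl -H "version: canary" -H "Host: demo.echo.test" 10.10.64.58

The relevant annotations are:

nginx.ingress.kubernetes.io/canary-by-header: "version"
nginx.ingress.kubernetes.io/canary-by-header-value: "canary"

About half of the requests without the "version: canary" header (and without the canary cookie) are forwarded to the canary version. The relevant annotation is:

nginx.ingress.kubernetes.io/canary-weight: "50"

Selection by cookie is also supported. The cookie value is always or never: always forwards the request to the canary, and never does not. The annotation that sets the cookie name is:

nginx.ingress.kubernetes.io/canary-by-cookie: "canary-cookie"

curl -v -b canary-cookie=always demo.echo.test # reaches the canary version
curl -v -b canary-cookie=never demo.echo.test # reaches the non-canary version

Header, cookie, and weight take effect in this order of precedence: canary-by-header -> canary-by-cookie -> canary-weight.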

References

https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/annotations/#canary